OCP25 EMEA Summit Report

1MW racks, 200G LRO, and 100G LRO VCSELs

Co-Packaged Optics: Market and Technology Update

The state of the CPO market and outlook for future progress.

CPO is Turning the Corner

The inevitable technology that hasn’t happened – yet.

OCP24: Optical Gets Invited to the AI Party

OCP24 was all about AI, and optics is an increasingly important part of the AI discussion.

ECOC 2024 Show Report

Nvidia PAM-4 DSP, Ciena 2x 800G-LR, LPO Lives, 1600ZR slipping, L-Band ramping.

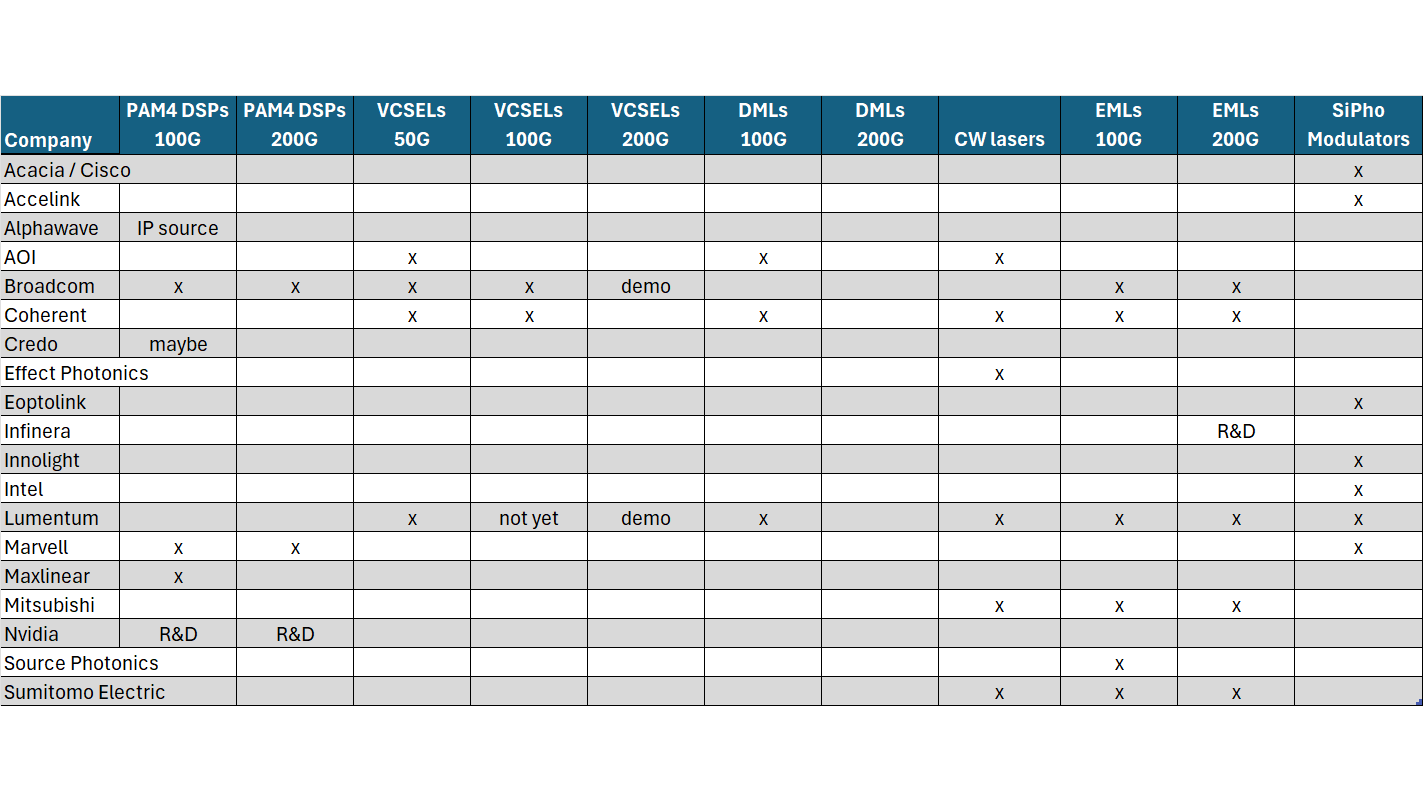

Datacom Optical Industry Tracker

This report tracks the component suppliers that enable the 400GbE+ Datacom Transceiver ecosystem. It includes an overview of the types of transceivers employed, the key components that are used in those transceivers, and which vendors supply those components.

2023 OCP Global Summit Report

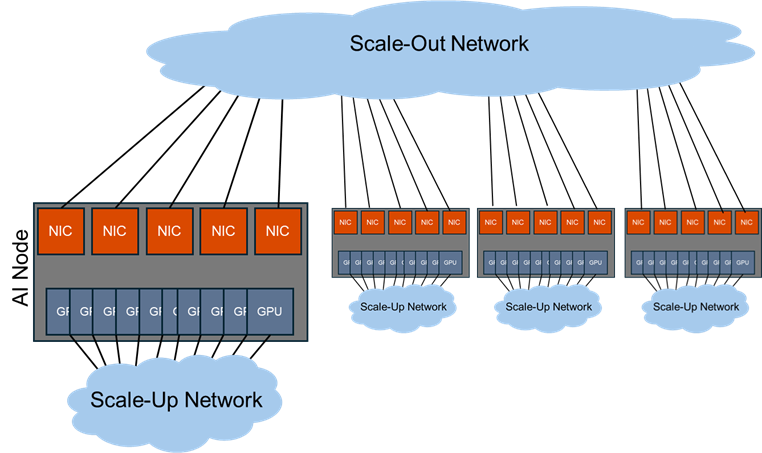

The “AI Emergency”, LPO vs. CPO, and pushing the scaling limits of AI computing.

ECOC 2023 Show Report

800ZR, debate on Linear Pluggable Optics and IPoDWDM, and progress in 200G/lane modules.

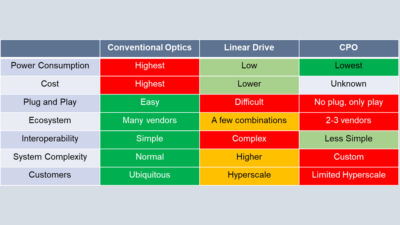

The Linear Drive Market Opportunity

Examines the benefits and challenges of Linear Drive and provides a preliminary assessment of its impact.

1Q23 Takeaways – MRVL CRDO AVGO

The AI messaging was strong, but datacenter revenue appears to be bottoming.

OFC 2023 Show Report

Key technology takeaways from OFC 2023.

Weekly Earnings – CIEN AVGO CRDO MRVL

Broadcom and Marvell provide radically different revenue outlooks.

OFC 2022 Show Report

Key technology takeaways from OFC 2022.

OFC 2022 – Sneak Peek

Here is a sneak peek of the hot topics of discussion at OFC 2022.

OFC21: Co-packaged Optics

Co-packaged optics (CPO) moved from hero experiments to discussions of commercial roadmaps this year. In this update from OFC21, we cover the key points about CPO discussed at the show.

OFC2019 – Inside the Datacenter

The next major stop on the road to faster speeds in the datacenter is 400Gbps Ethernet, and activity at OFC2019 centered on making this speed a feasible commercial option.

OFC 2019 Preview and Predictions

The 2019 Optical Fiber Communication Conference (OFC) is less than a month away. Cignal AI’s analysts have compiled a list of predictions for what they expect to see from vendors and other OFC participants.

OFC 2018 – 400G Inside the Datacenter

When the conversation at OFC turned to short reach client optics, faster speeds were the central focus. Several companies announced products and gave demonstrations of 400G capable technology. The 100G…

OFC 2017 – Inside the Datacenter

100G and the Road to 400G

The transition to 100G network speeds inside the data center is underway at every major hyperscale operator simultaneously, creating major industry bottlenecks. Despite QSFP28…

Investor Call – OFC 2017 Takeaways

Andrew Schmitt provided key OFC 2017 takeaways during an investor call hosted by Troy Jensen of Piper Jaffray.