The Open Compute Project EMEA Summit 2025 took place in Dublin on April 29-30. The event runs six months out of phase with the larger OCP Global Summit, held each fall in San Jose (see our notes OCP24 – Optical Gets Invited to the AI Party), and is much smaller. Unlike OFC, OCP puts computing infrastructure first, including topics such as cooling and power.

The objective of the EMEA summit is identical to that of the Global Summit: to assemble industry participants interested in pursuing open computing and networking hardware, but with a bias toward EMEA participants. The 2025 EMEA summit had dedicated photonics tracks that filled a full day, as well as multiple keynotes from service providers. Cignal AI also had the opportunity to moderate the panel that concluded the photonics track. OCP plans to release the presentations and recordings, which contain detailed data, but here are our observations in the meantime:

- AI cluster sizes need to scale faster. Meta aims to grow cluster size by 10x over the next 5 years and, through larger scale-out networks, to achieve a 10,000x jump in GPU compute power. This includes a transition to double-wide racks with hundreds of GPUs and a goal of 1 megawatt of power per rack. That’s not a typo.

- As expected, Ethernet has won the networking battle. Ultra Ethernet, as defined by the Ultra Ethernet Consortium (UEC), is the go-forward plan for scale-out among hyperscalers building their own systems. Broadcom acknowledged that while Ethernet is now dominant for scale-out applications, it will take time to dislodge NVLink for scale-up.

- Power requirements for traditional computing architectures are increasing, but AI rack power requirements are growing much faster. This is forcing a change in power and cooling architectures: these functions will be disaggregated from the compute rack into dedicated racks rather than colocated in it, where they would occupy valuable space that could otherwise hold more GPUs. This includes a push for centralized conversion from 416 VAC to ±400 VDC (the Mt Diablo architecture) and distribution to the compute racks. Project Deschutes takes a similar approach for coolant distribution units (CDUs), with a single rack acting as a heat exchanger between multiple compute racks and the facility loop. Google released a paper outlining these initiatives, a good example of how OCP allows participants to propose open hardware architectures outside the standard compute and networking areas.
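The Meta targets and the power-distribution figures above lend themselves to some back-of-envelope arithmetic. The sketch below is ours, not from the presentations, and the helper names are hypothetical. It assumes growth compounds evenly each year, ignores conversion losses, and assumes a 1 MW rack is fed across the full 800 V between the +400 V and -400 V rails; 48 V appears only as a hypothetical low-voltage comparison point.

```python
# Back-of-envelope arithmetic for the rack-scale figures above.
# Assumptions (ours, not from the presentations): growth compounds evenly
# each year; conversion losses are ignored; the rack load is fed across
# the full 800 V between the +400 V and -400 V rails.


def annual_growth_factor(total_growth: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_growth over years."""
    return total_growth ** (1.0 / years)


def feed_current_amps(power_watts: float, volts: float) -> float:
    """DC current needed to deliver power_watts at a given voltage (I = P / V)."""
    return power_watts / volts


if __name__ == "__main__":
    # Meta's stated goals: 10x cluster size and 10,000x compute in 5 years.
    print(f"cluster size: {annual_growth_factor(10, 5):.2f}x per year")
    print(f"GPU compute:  {annual_growth_factor(10_000, 5):.2f}x per year")

    rack_watts = 1_000_000  # the 1 MW per-rack goal
    # 48 V is a hypothetical low-voltage comparison point, not from the talks.
    for volts in (48, 800):
        amps = feed_current_amps(rack_watts, volts)
        print(f"{volts:>4} V bus: {amps:,.0f} A")
```

Run as a script, it prints about 1.58x and 6.31x per year for the two growth targets, and roughly 20,833 A at 48 V versus 1,250 A at 800 V. Because resistive loss in a conductor scales with I², the same busbar would dissipate roughly (800/48)² ≈ 278 times less at 800 V, which is one way to see why higher-voltage DC distribution becomes attractive as rack power approaches a megawatt.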

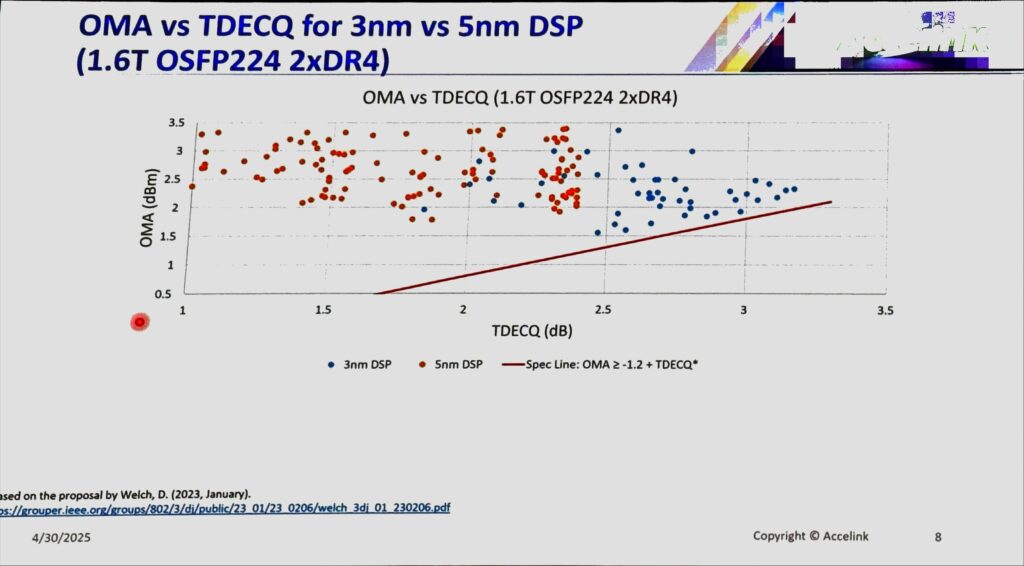

- Accelink shared test data for 200G/lane 1.6T OSFP transceivers using transmit-retimed-only optics (known as TRO or LRO), which reduced power consumption to 16W (compared with 10W for LPO) using new 3nm PAM-4 DSPs. It showed data for both 3nm and 5nm DSPs, and notably there was significantly less margin with the 3nm DSPs. Accelink also shared similar power consumption figures for FR4-based 1.6T optics.

- CIG stated that there were no technical barriers to adopting CPO at 100G, only operational challenges that could be solved only in a closed, non-interoperable environment. Multiple presenters highlighted the new equalizer circuitry in the Broadcom Tomahawk 6, which increases the number of transmit feed-forward equalizer (FFE) taps from 6 in the previous generation to 21, and indicated that this would improve LRO performance.

- Huawei presented a novel LRO architecture using 100G/lane 400G optics and VCSELs for AI compute interconnect. Huawei did not confirm it, but it bore a striking resemblance to the interconnect in the new Huawei CloudMatrix 384 AI cluster (edit: this VCSEL approach is not used in the 384). Huawei made the point that a lower-tech, lower-risk approach sometimes works well and suits the market constraints it must now operate under. This presentation will be worth revisiting once it is released.
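For readers less familiar with transmit equalization: a transmit FFE is a finite impulse response (FIR) filter that pre-distorts the symbol stream, with each tap weighting a neighboring symbol so that part of the channel's intersymbol interference is cancelled. The minimal sketch below shows what a tap count means. It assumes plain Python lists and hypothetical tap values; the function name `tx_ffe` is ours, it does not reflect Broadcom's implementation, and it does not model why more taps would help LRO links specifically.

```python
from collections.abc import Sequence

# Hypothetical PAM-4 symbol levels, used only for the example below.
PAM4_LEVELS = (-3, -1, 1, 3)


def tx_ffe(symbols: Sequence[float], taps: Sequence[float], main_cursor: int) -> list[float]:
    """Apply an N-tap transmit FFE (an FIR filter) to a symbol stream.

    y[n] = sum_k taps[k] * x[n + main_cursor - k]
    Taps before main_cursor weight upcoming symbols (pre-cursor taps);
    taps after it weight earlier symbols (post-cursor taps).
    Symbols outside the input are treated as zero.
    """
    out: list[float] = []
    for n in range(len(symbols)):
        acc = 0.0
        for k, coeff in enumerate(taps):
            idx = n + main_cursor - k
            if 0 <= idx < len(symbols):
                acc += coeff * symbols[idx]
        out.append(acc)
    return out


if __name__ == "__main__":
    stream = [3, -3, 1, -1, 3, 1]  # made-up PAM-4 symbols
    assert all(s in PAM4_LEVELS for s in stream)

    # Hypothetical 3-tap filter: one pre-cursor, the main cursor, one post-cursor.
    three_tap = [-0.1, 1.0, -0.2]
    print(tx_ffe(stream, three_tap, main_cursor=1))

    # A 21-tap filter has room for many more pre/post-cursor coefficients;
    # with only the main cursor set to 1.0 it passes symbols through unchanged.
    identity_21 = [0.0] * 10 + [1.0] + [0.0] * 10
    print(tx_ffe(stream, identity_21, main_cursor=10))
```

The design choice worth noting is that tap count sets how many symbol periods, before and after the current one, the transmitter can compensate for; a 21-tap filter simply has more coefficients to tune than a 6-tap one.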

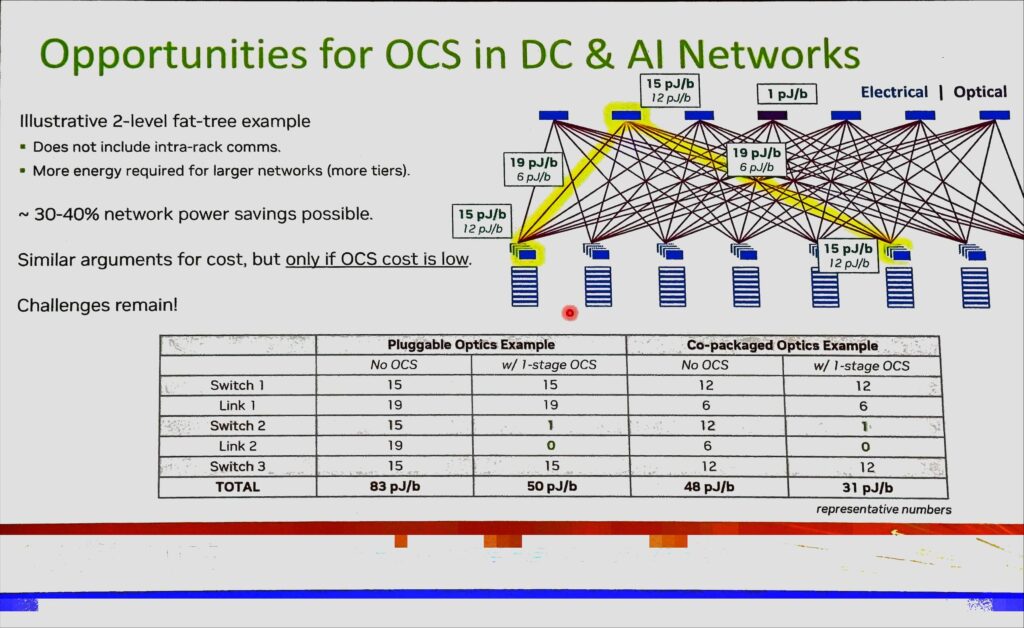

- Optical Circuit Switching (OCS) was mentioned in multiple presentations, but no concrete deployments or novel applications were highlighted. Spanish OCS company iPronics was in attendance, as was new system startup Oriole Networks. Nvidia indicated that some developments in SiPho-based solutions were interesting, but that it wanted to see the technology mature.

- CPO was a hot topic, but one with little new information beyond what was shared at GTC and OFC. Nvidia highlighted that the CPO solution outlined at GTC will yield 9W per 1.6T equivalent, only slightly better than LRO-based OSFP solutions. Ben Lee indicated that pluggables are not going anywhere anytime soon, but that CPO is a necessary and strategic step to reduce power and cost. He also pointed out that CPO would ultimately improve reliability through integration.

- Nvidia had an excellent slide showing how combining CPO with OCS, which eliminates an electrical switching stage, cuts total network power by 40% or more. It also noted that OCS costs must come down before adoption can take place.

- Sustainability was a major buzzword among many EMEA attendees, with OVHcloud's keynote focused on a call to action for providers to surface the carbon overhead of specific computing operations via an API. This stood in stark contrast to Western hyperscalers, which have publicly said that sustainability goals are secondary to scaling computing capabilities in the AI race.
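The transceiver and CPO power figures quoted above are easier to compare as energy per bit. Because 1 W at 1 Tb/s is 1 pJ per bit, dividing module watts by line rate in Tb/s gives pJ/bit: 16W at 1.6T is 10 pJ/bit, 10W is 6.25 pJ/bit, and 9W is 5.625 pJ/bit. The sketch below simply tabulates the figures quoted in this note; the labels and the helper name `picojoules_per_bit` are ours, and it assumes the quoted numbers are comparable module-level figures, which may or may not hold given differing host SerDes and switch power accounting.

```python
# Convert optics power at a given line rate into energy per bit.
# 1 W / 1e12 b/s = 1e-12 J/b, so watts divided by Tb/s gives pJ/bit.


def picojoules_per_bit(power_watts: float, rate_tbps: float) -> float:
    """Energy per bit in pJ for a link drawing power_watts at rate_tbps."""
    return power_watts / rate_tbps


# Power figures as quoted in this note, all at 1.6T (labels are ours).
FIGURES = {
    "Accelink 1.6T TRO/LRO OSFP (3nm DSP)": 16.0,
    "Accelink 1.6T LPO": 10.0,
    "Nvidia CPO, per 1.6T equivalent": 9.0,
}

if __name__ == "__main__":
    for label, watts in FIGURES.items():
        print(f"{label}: {picojoules_per_bit(watts, 1.6):.3f} pJ/bit")
```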

OCP EMEA was a useful technical conference. Given the depth of detail in the slides, it would be valuable to share them directly here, but unfortunately they are not yet available.